
- August 22, 2025

Enterprise AI Browsers: Rocket Fuel for the Business—And a Loaded Gun Without Zero Trust

— Dr. Chase Cunningham, Strategic Advisor of Mammoth Cyber

Let me level with you. AI is blazing through the enterprise like a brushfire. Every board deck is splashed with “GenAI,” every vendor demo promises “copilots,” and every team has a skunkworks prompt library stashed away in Notion. It’s easy to get swept up in the hype and think the hard part is model selection or prompt crafting.

It isn’t.

The hard part is context and control.

Most AI is blind to what matters in your business right now. If an assistant cannot see the live state of your systems (the open support tickets, the last signed contract in your CRM, the latest updates in the customer’s Slack channel), it can’t make reliable decisions. It’s stuck regurgitating historical patterns from its training data, not the reality on the ground. That’s why the enterprise AI browser matters: it puts AI where your business lives, inside the operational glass of the browser, and wires intelligence into the real-time context of your work. Done right, that’s rocket fuel for productivity. Done wrong, it’s an existential risk.

This post is a pragmatic map from someone who’s spent years telling people to assume breach and trust nothing, verify everything. If you’re going to put AI in the browser—where your teams click into CRMs, ERPs, HR systems, and financials—you must do it the Zero Trust way. There’s no “kind of secure” here. You either deploy with guardrails and policy, or you’re inviting a superhuman copy–paste engine to rifle through your crown jewels.

AI’s Real Blind Spot: Live Business Context

Traditional enterprise AI workflows take prompts in, spit text out, and hope you bolt on an API or two for “context.” That’s not enough. Context isn’t a flavor shot; it’s the main course. If an assistant is going to help a seller prep for a call, it needs real-time access to the account’s purchase history, open opportunities, last-touch sequences, outstanding support tickets, and legal redlines. If it’s nudging finance on close, it needs the latest ledger, not a stale snapshot baked into its training data. In other words, the AI has to see the same operational canvas your humans do: the browser.

The browser is where knowledge work happens—SaaS consoles, internal portals, intranets, dashboards, forms, and workflows. When you connect AI directly into that environment, you flip the game:

- From “guessing” to understanding. The assistant reads the page, the fields, the context, and the state—right now.

- From theory to execution. It doesn’t just recommend; it can draft, update, post, reconcile, and route—under policy.

- From chatbot to coworker. It participates in the same systems your teams use and can move work forward responsibly.

That’s the upside. Here’s the flip side.

The Existential Risk: AI in the Browser Without Guardrails

Put an unconstrained AI in the browser, and you’ve given a hyper-automated agent a master key. It can read anything the user can, click anywhere the user can, and move data faster than any human. That means:

- Data exfiltration at scale: Customer lists, pricing tiers, forecasts, internal legal docs—gone in seconds to some “untrusted destination.”

- Unauthorized actions: Silent edits to records, automated approvals you never intended, phantom comms “from” executives.

- Regulatory violations: GDPR/CPRA data drift, HIPAA exposure, SOX control bypasses—all without an obvious breadcrumb.

If that sounds dramatic, good. It should. A browser-embedded agent with no policy is a liability multiplier. The security model cannot be “hope the assistant behaves.”

Zero Trust AI: Apply First Principles Where the Work Happens

Zero Trust isn’t a logo or a feel-good slogan. It’s a set of operating rules:

Assume breach. Expect prompts to be tampered with and interfaces to be adversarial.

Verify explicitly. Continuously evaluate identity, device, app, data classification, and action type—per request.

Least privilege. Give the AI the minimum data and capability needed to complete the task—nothing more.

Segment and contain. Create trust circles for regulated apps and forbid cross-boundary data movement by default.

Inspect and log. Treat AI actions like privileged operations. Inspect inputs and outputs, and log everything for audit.

Bring those principles into the browser where AI operates, and you can unlock the value without detonating your risk register.

What “Enterprise AI Browser” Means (When It’s Done Right)

A lot of marketing slides will tell you that an “AI browser” is just a better front-end plus a sidebar assistant. That’s shallow. The enterprise-grade version is a policy enforcement layer that sits between AI and your SaaS/enterprise apps, understands context, and makes real-time decisions. Concretely, you need:

Authentication & Authorization. Strong identity, constrained sessions, and role-based access to data and actions. This is table stakes: an AI agent should inherit only the privileges you explicitly grant, not whatever the user can see.

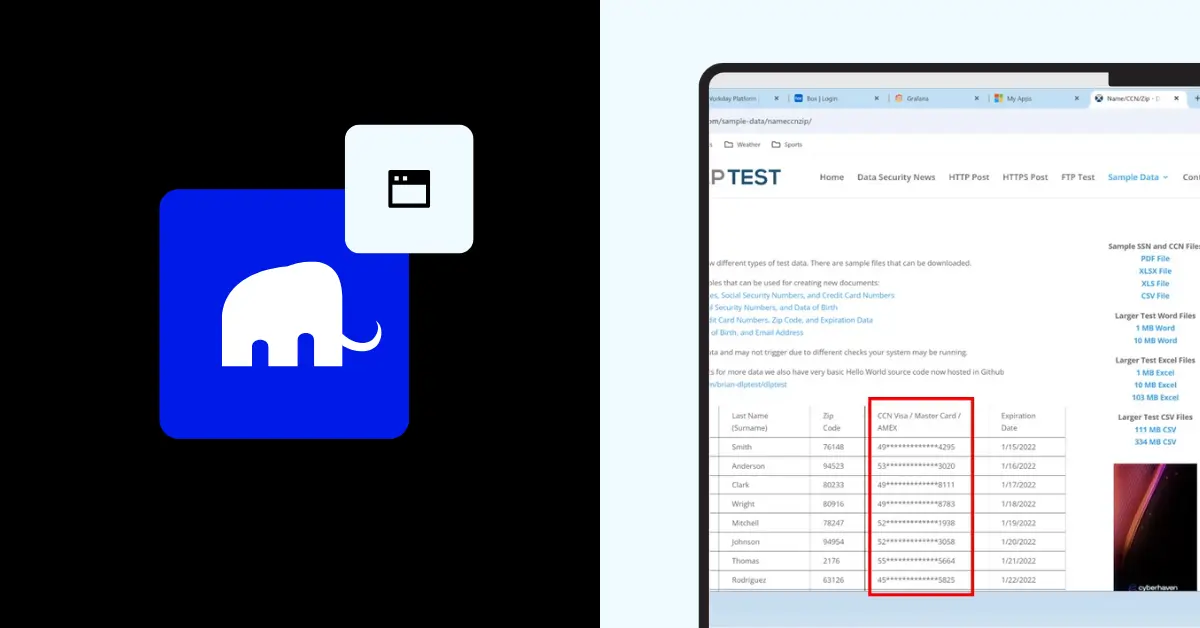

Data Loss Prevention (DLP). Inspect what the AI reads and what it attempts to output. Stop sensitive data from leaving trusted domains; prevent prompts/responses from carrying PII/PHI or secrets into untrusted models or tools.

Zero Trust Access. Wrap connections to internal apps; keep the AI in a controlled environment with least-privilege network paths. If the agent doesn’t have a business reason to touch a system, it simply can’t.

Trust Circles for regulated applications. Define enclaves (e.g., finance, healthcare, legal). Inside the circle: strict rules, masked data, auditable actions. Cross-circle movement: blocked by default unless explicitly allowed.

Compliance enforcement in real time. Detect and mask PII/PHI as the AI reads or composes, not after the fact—force minimization and redaction into every prompt and every response.

Comprehensive audit logging. Every action evaluated against policy, every decision recorded, every exception justified. Security gets visibility; compliance gets evidence.

Shadow AI detection and prompt-injection defenses. Surface unauthorized tools and malicious instructions, and stop them cold. This protects both the human and the agent.

When implemented, the result is a controlled, context-rich, execution-capable environment for AI—not a free-for-all. It’s the difference between “fingers crossed” and “provably governed.”
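To make the least-privilege and trust-circle requirements above concrete, here is a minimal Python sketch. It assumes permissions are plain strings such as `crm:read` and that each app belongs to exactly one circle; `Principal`, `TRUST_CIRCLES`, and `ALLOWED_CROSSINGS` are hypothetical names for illustration, not a Mammoth API. The agent’s effective rights are the intersection of what the user holds and what was explicitly granted to the agent, and data movement between circles is denied unless a crossing is explicitly listed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Principal:
    user_permissions: frozenset[str]  # everything the human user can do
    agent_grants: frozenset[str]      # what was explicitly granted to the AI agent


def effective_permissions(p: Principal) -> frozenset[str]:
    # Least privilege: the agent gets only rights that are BOTH held by the
    # user AND explicitly granted; it never inherits the user's full reach.
    return p.user_permissions & p.agent_grants


# Hypothetical mapping of apps to trust circles.
TRUST_CIRCLES = {"erp": "finance", "ledger": "finance", "ehr": "health", "crm": "sales"}

# Hypothetical explicit exceptions as (source_circle, destination_circle) pairs.
# Empty set: every cross-circle move is denied by default.
ALLOWED_CROSSINGS: set[tuple[str, str]] = set()


def may_move_data(src_app: str, dst_app: str) -> bool:
    src, dst = TRUST_CIRCLES.get(src_app), TRUST_CIRCLES.get(dst_app)
    if src is None or dst is None:
        return False  # apps outside any known circle are denied by default
    return src == dst or (src, dst) in ALLOWED_CROSSINGS
```

With these definitions, a user holding `crm:write` does not pass that right to the agent unless it was granted, and moving data from `erp` to `crm` is refused until someone adds `("finance", "sales")` to the exceptions.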

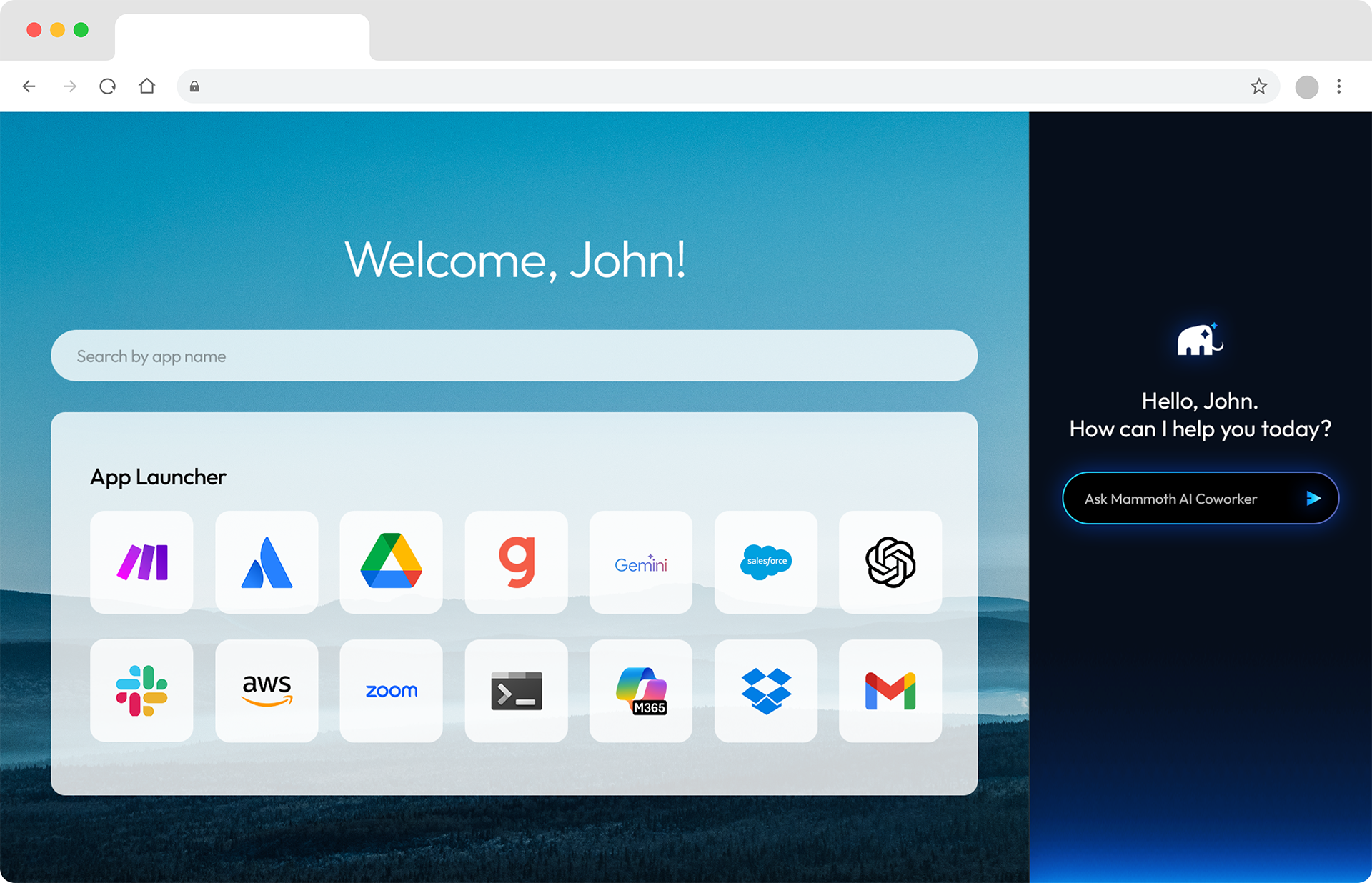

Example: The Mammoth Enterprise AI Browser frames this model explicitly (security-first, policy-driven, and context-aware) so AI can see just enough live business data to be useful while operating inside a controlled, logged, and compliant boundary. That’s the correct pattern.

From Chatbot to Coworker: The Right Use Cases

You don’t buy an enterprise AI browser to write haikus. You buy it to compress cycles on core workflows—under policy:

Sales preparation: The assistant reviews a customer’s CRM profile, open opportunities, and prior communications, but only reveals fields the rep is cleared to see. It drafts the call plan and updates next steps—no data leakage, no overreach.

Finance & ERP: It generates compliance-ready reports, tied to the current ledger state, with masked PII and immutable audit trails.

Support resolution: It proposes ticket responses using the live knowledge base, recent defect notes, and the customer’s environment data—with redaction policies baked in.

HR onboarding: It automates checklists across HRIS, payroll, and identity systems while respecting departmental trust circles and least privilege.

The pattern is consistent: context in, control throughout, execution under policy.
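The sales-preparation case comes down to read minimization: the agent sees only fields that are both needed for the task and visible to the rep. A minimal sketch, assuming records arrive as flat dictionaries; the field names and `SALES_PREP_FIELDS` are hypothetical. Returning the withheld field names as well gives the audit trail something to record.

```python
# Hypothetical set of fields the sales-prep task actually needs.
SALES_PREP_FIELDS = frozenset(
    {"account", "open_opportunities", "last_touch", "open_tickets", "discount_floor"}
)


def minimize_record(
    record: dict, task_fields: frozenset[str], cleared_fields: set[str]
) -> tuple[dict, list[str]]:
    """Keep only fields required by the task AND cleared for this user."""
    allowed = task_fields & cleared_fields
    kept = {k: v for k, v in record.items() if k in allowed}
    # Everything else is withheld; the names (not values) can go to the audit log.
    withheld = sorted(set(record) - allowed)
    return kept, withheld
```

Because the allowed set is an intersection, a field the task wants but the rep is not cleared for (such as a discount floor) stays hidden, and so does any field the rep could see but the task does not need.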

Architecture: A Zero Trust Pattern for AI in the Browser

When I talk to architects, I draw the same picture:

Enterprise AI Browser (EAB) is the user/agent workspace. It renders apps, reads DOM/state, and mediates actions.

Policy Decision Point (PDP) evaluates every AI-performed read/write against identity, device, app, data class, and action type. Results: allow, transform (mask/minimize), require human-in-the-loop, or deny.

Data Classification & Redaction Engine inspects page content and prompts/responses in real time; applies DLP and PII/PHI masking.

Trust Circles & Segmentation enforce per-domain boundaries: finance ≠ marketing ≠ R&D. Cross-circle movement is blocked unless justified.

Zero Trust Access Gateway brokers private app access with short-lived credentials; no broad lateral paths.

Observability & Audit stream events to SIEM/LOD. Every decision is explainable.

Model Abstraction Layer keeps you model-agnostic; you can switch or mix models without ripping out controls.

Human-in-the-Loop (HITL) Guardrails for sensitive actions (wire transfers, contract terms, HR changes).

This is how you get speed without surrender—the AI acts quickly where safe, and asks for human review where risk spikes.
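One way to picture the Policy Decision Point is as a pure function from request attributes to one of the four outcomes it can return: allow, transform, require human-in-the-loop, or deny. This sketch assumes a tiny, hypothetical rule set (`SENSITIVE_APPS`, string data classes, string action types); a production PDP would evaluate far richer policy, but the shape of the decision is the same.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    TRANSFORM = "transform"  # mask/minimize, then proceed
    HITL = "require_human"
    DENY = "deny"


@dataclass(frozen=True)
class Request:
    identity_verified: bool
    device_compliant: bool
    app: str
    data_class: str  # hypothetical labels: "public", "internal", "pii", "phi"
    action: str      # "read" or "write" in this sketch


# Hypothetical apps where any AI write needs a human (payments, HR, contracts).
SENSITIVE_APPS = {"payments", "hris", "contracts"}


def decide(req: Request) -> Decision:
    # Verify explicitly: failed identity or device posture is an outright deny.
    if not (req.identity_verified and req.device_compliant):
        return Decision.DENY
    # Sensitive writes (wire transfers, HR changes, contract terms) need a human.
    if req.action == "write" and req.app in SENSITIVE_APPS:
        return Decision.HITL
    # Regulated data may flow only after masking/minimization.
    if req.data_class in {"pii", "phi"}:
        return Decision.TRANSFORM
    if req.action in {"read", "write"}:
        return Decision.ALLOW
    # Anything the rules do not recognize falls through to deny.
    return Decision.DENY
```

Rule order is a design choice here: the verification check comes first so no later rule can override it, and unrecognized actions default to deny rather than allow.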

Day‑One Controls: The Non‑Negotiables

If you’re piloting an enterprise AI browser next quarter, ship with these guardrails turned on:

Read-minimize: The agent can only access fields required for the current task.

Write constraints: Sensitive writes (e.g., price, pay, policy) require explicit human confirmation.

DLP both ways: Inspect inputs and outputs. Prevent “copy” into prompts from regulated pages and “paste” of responses into untrusted destinations.

Trust circles: Finance, legal, and health data are ring-fenced. Cross-circle data movement is denied by default.

PII/PHI masking: Auto-detect and mask on read and on compose—before anything reaches a model.

Prompt injection shields: Strip or flag adversarial instructions embedded in pages, tickets, or docs.

Shadow AI detection: Block unapproved model calls, rogue extensions, or side-channel exfil.

Cost controls: Pool tokens and centralize model usage to reduce spend and improve observability. Model choice is a policy, not a user preference.
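The masking and prompt-injection controls can be sketched as a preprocessing step that runs before any page text reaches a model. The regular expressions below are deliberately naive and the `[LABEL]` tokens are hypothetical; they only illustrate where masking and flagging sit in the flow, not how a production DLP engine or injection classifier detects sensitive data.

```python
import re

# Naive, illustrative detectors (assumption): real DLP uses much richer classifiers.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Naive injection heuristic (assumption): flags one well-known phrasing only.
INJECTION_HINT = re.compile(
    r"ignore\s+(?:all\s+|any\s+)?(?:previous|prior)\s+instructions", re.IGNORECASE
)


def mask_pii(text: str) -> tuple[str, dict[str, int]]:
    """Replace each detected value with a [LABEL] token and count replacements."""
    counts: dict[str, int] = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts


def prepare_prompt(page_text: str) -> dict:
    """Mask PII before the text goes to a model, and flag suspected injections."""
    masked, counts = mask_pii(page_text)
    return {
        "text": masked,
        "masked": counts,
        "injection_suspected": bool(INJECTION_HINT.search(page_text)),
    }
```

A flagged result would typically be routed back through the PDP (for example to deny or human review) rather than silently sent to the model.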

What Good Looks Like: Metrics That Matter

You can’t manage what you don’t measure. Track:

Policy coverage: % of apps and workflows under enforced policy.

Action disposition mix: allow vs. transform vs. HITL vs. deny.

DLP saves: attempts to move sensitive data that were blocked, and the volume of exfiltration prevented.

Injection defense hits: prompt injection attempts detected/neutralized.

Shadow AI detections: unapproved tools/models surfaced and blocked.

Time-to-value: cycle-time reductions per workflow (lead-to-first-call, ticket-to-resolution, close-to-book).

Cost per task: AI inference cost normalized by successful task completions (token pooling helps here).

These numbers tell leadership a clear story: we’re getting more done, faster, with less risk—not more.
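Several of these metrics are simple ratios over the policy layer’s logs. A minimal sketch, assuming decisions are logged as the strings `allow`, `transform`, `hitl`, and `deny`, and that inference cost is already aggregated per period; the function names are hypothetical.

```python
from collections import Counter

DISPOSITIONS = ("allow", "transform", "hitl", "deny")


def disposition_mix(decisions: list[str]) -> dict[str, float]:
    """Share of each PDP outcome; an empty log yields an empty dict."""
    counts = Counter(decisions)
    total = sum(counts[d] for d in DISPOSITIONS)
    if total == 0:
        return {}
    return {d: counts[d] / total for d in DISPOSITIONS}


def policy_coverage(covered_apps: set[str], all_apps: set[str]) -> float:
    """Fraction of known apps that run under enforced policy."""
    return len(covered_apps & all_apps) / len(all_apps) if all_apps else 0.0


def cost_per_task(total_inference_cost: float, successful_tasks: int) -> float:
    """Inference spend normalized by successful completions (inf if none succeeded)."""
    if successful_tasks == 0:
        return float("inf")
    return total_inference_cost / successful_tasks
```

Tracking the disposition mix over time is what shows whether the agent is earning more autonomy (a rising `allow` share) or whether risk is spiking (a rising `deny` or `hitl` share).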

Anti‑Patterns to Kill Before They Kill You

“Just block AI in the browser.” You’ll get shadow AI anyway, and you’ll lose the productivity upside. Control beats prohibition.

“Let the AI see everything; it’s smart.” Intelligence without constraint is a liability. Least privilege applies to machines, too.

“We’ll fix it in fine‑tuning.” Fine‑tuning doesn’t solve policy or data lineage. Guardrails must live in the execution environment.

“We can audit later.” If you didn’t log it, it didn’t happen. Auditable trails aren’t optional in regulated enterprises.

“One model to rule them all.” Be model‑agnostic. Use the right model per task and keep controls consistent at the browser/policy layer.

The Adoption Path: Crawl → Walk → Run → Autonomy (Under Policy)

Crawl (Observe): Put the enterprise AI browser in read‑only assist mode. Log everything. Map where PII/PHI and secrets appear.

Walk (Assist): Enable low‑risk write actions with HITL. Turn on masking and trust circles.

Run (Automate): Allow autonomous actions in bounded workflows (e.g., ticket triage, report generation) with rollback hooks.

Autonomy (Constrained): Expand to higher-value tasks where the risk is well‑understood and controls are proven. Keep HITL for the irreversible, high‑impact steps.

This is not “big bang.” It’s progressive hardening with measurable wins at each step.
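The rollout stages can be expressed as a gate that the policy layer consults before each action. This is a sketch under assumed inputs (a coarse `risk` label and a `reversible` flag per action); the mapping from stage to outcome is one reasonable reading of the four stages, not a prescribed policy.

```python
from enum import IntEnum


class Stage(IntEnum):
    CRAWL = 1     # read-only assist, log everything
    WALK = 2      # low-risk writes, each with human-in-the-loop
    RUN = 3       # autonomous low-risk writes in bounded, reversible workflows
    AUTONOMY = 4  # broader autonomy; irreversible steps still need a human


def mode_for(stage: Stage, action: str, risk: str, reversible: bool) -> str:
    """Return 'allow', 'hitl', or 'deny' for an action at a given rollout stage."""
    if action == "read":
        return "allow"
    # From here on, the action is a write.
    if stage == Stage.CRAWL:
        return "deny"
    if stage == Stage.WALK:
        return "hitl" if risk == "low" else "deny"
    if not reversible:
        return "hitl"  # no rollback hook: keep a human in the loop at every later stage
    if stage == Stage.RUN:
        return "allow" if risk == "low" else "hitl"
    return "allow" if risk in {"low", "medium"} else "hitl"
```

Keeping the stage as an explicit input means promoting a workflow from Walk to Run is a reviewed policy change, not a code change.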

Why an Enterprise AI Browser Is Also a Finance Story

Security is the oxygen; cost is the gravity. Model licenses and tokens add up, especially when every team is hitting APIs independently. Centralizing AI usage through the enterprise browser lets you pool tokens and enforce policy‑based model routing (cheap models for summaries, premium for complex reasoning), dropping your TCO while improving control. That’s not a nice‑to‑have; that’s how you sustain the program past the pilot.
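Policy-based model routing and token pooling can be sketched in a few lines. The task types, the model names `economy-model` and `premium-model`, and the `TokenPool` class are hypothetical placeholders; the point is that routing and budget live in central policy rather than in each user’s settings.

```python
from dataclasses import dataclass, field

# Hypothetical routing table: cheap tier for summaries, premium for complex reasoning.
ROUTES = {
    "summarize": "economy-model",
    "classify": "economy-model",
    "complex_reasoning": "premium-model",
}


def route(task_type: str) -> str:
    # Unknown task types default to the cheaper tier; a stricter policy might deny instead.
    return ROUTES.get(task_type, "economy-model")


@dataclass
class TokenPool:
    budget: int
    used: int = 0
    by_team: dict[str, int] = field(default_factory=dict)

    def charge(self, team: str, tokens: int) -> bool:
        """Debit the shared pool; refuse the request if it would exceed the budget."""
        if self.used + tokens > self.budget:
            return False
        self.used += tokens
        self.by_team[team] = self.by_team.get(team, 0) + tokens
        return True
```

Because every request passes through one pool, per-team attribution (`by_team`) comes for free, which is also what feeds the cost-per-task metric.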

The Bottom Line from Dr. Zero Trust

The future of enterprise AI isn’t a disconnected chatbot living in a side panel. It’s an embedded, context‑aware operating layer that understands your live business and can execute—under policy—inside your workflows. The enterprise AI browser is the bridge. It’s how you turn AI into a governed coworker instead of a liability.

But let’s be crystal clear: without Zero Trust guardrails, an AI‑enabled browser is an existential risk. It will see too much, move too fast, and leave too few traces. With Zero Trust guardrails, it becomes the best teammate your business has ever had—fast, compliant, and accountable.

So take the win and control the risk:

Put AI where the work is—the browser.

Wrap it in real‑time policy, DLP, trust circles, and compliance enforcement.

Log everything, measure what matters, and grow autonomy by proof, not by vibes.

Stay model‑agnostic and centralize cost control.

Do that, and you’ll get what everyone promised but few can deliver: AI that runs the business—without running you off a cliff.

TL;DR (But Please Don’t Only Read This)

AI in the enterprise fails without live context; the browser is where that context lives. An enterprise AI browser can transform AI from “chatty” to “capable,” but only if you implement Zero Trust guardrails: least‑privilege access, DLP on inputs and outputs, trust circles for regulated apps, real‑time PII/PHI masking, and exhaustive logging. Do it right and you unlock speed and savings (token pooling, centralized policy) while reducing risk. Do it wrong and you’ve built a self‑service data‑exfil machine. Choose wisely.

If you want a mental model to audit your readiness, start with this question: “Can our AI agent explain—line by line—what it did in the browser, what data it touched, why policy allowed it, and where the output went?” If the answer is no, you don’t have Zero Trust AI yet.
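The readiness question maps directly onto the fields an audit event must carry: what the agent did, what data it touched, which policy allowed it, and where the output went. A minimal sketch, assuming events are written as JSON lines for a SIEM; the `AuditEvent` schema, `explainable` check, and example values are hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEvent:
    agent_id: str
    user_id: str
    action: str               # what it did
    app: str
    fields_touched: list[str]  # what data it touched
    policy_rule: str          # why policy allowed (or blocked) it
    decision: str
    output_destination: str   # where the output went
    timestamp: str


def record(**kwargs) -> str:
    """Build one audit event as a JSON line, stamped in UTC."""
    event = AuditEvent(timestamp=datetime.now(timezone.utc).isoformat(), **kwargs)
    return json.dumps(asdict(event), sort_keys=True)


REQUIRED = ("action", "fields_touched", "policy_rule", "output_destination")


def explainable(event_json: str) -> bool:
    """True only if the event answers all four readiness questions."""
    e = json.loads(event_json)
    return all(e.get(k) for k in REQUIRED)
```

An event with an empty `policy_rule` or `output_destination` fails the check, which is exactly the “no” answer that means Zero Trust AI is not in place yet.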

Chase Cunningham


© 2025 Mammoth Cyber. All rights reserved.
